Shaping Shadows

Privacy infrastructures, mental models, and legibility

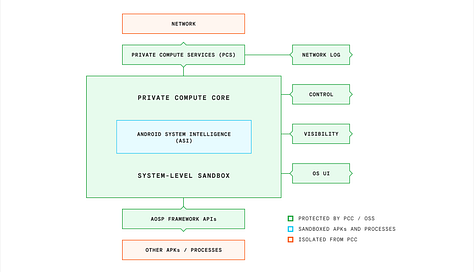

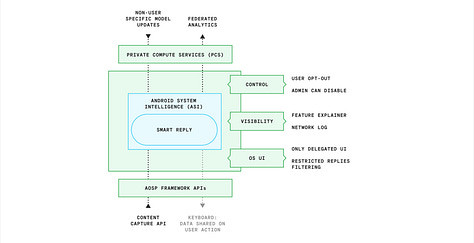

In the previous article I mentioned my hope for designers to think and act in service of better infrastructures. Here is a concrete example of creating one that runs on many of our devices. Between 2020 and 2023 I contributed to building the Android Private Compute Core and set the principles for Protected Computing at Google. Android’s Private Compute Core is a secure, isolated sandbox where continuous sensing data (such as keyboard input, microphone, and camera) can be processed on device without anyone else, including the feature developers, seeing it. These types of technologies are important because, even prior to the current explosion of LLMs and GenAI, many useful features of our devices required continuous sensing and processing. On top of that, the machine learning models built to support those features require new data to improve. For this we included federated learning and analytics techniques to guarantee privacy throughout the feature lifecycle.
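To make the federated idea a little more tangible, here is a minimal sketch of federated averaging on a toy one-parameter model. It is an illustration under stated assumptions, not how the Private Compute Core implements it: the function names, the learning rate, and the toy data are all hypothetical, and real deployments layer protections such as secure aggregation on top. The point it shows is simply that each device learns from its own data and only a model update, never the raw data, is sent back.

```python
from typing import List


def local_update(weight: float, data: List[float], lr: float = 0.1) -> float:
    """Run one gradient step on a single device (hypothetical toy model).

    The model is one number that tries to match the device's data,
    with loss = mean((weight - x)^2). The raw data stays in this function.
    """
    grad = sum(2 * (weight - x) for x in data) / len(data)
    return weight - lr * grad


def federated_round(global_weight: float, devices: List[List[float]]) -> float:
    """Combine per-device updates into a new global weight.

    The "server" only ever sees (updated weight, sample count) pairs,
    and averages them weighted by how much data each device holds.
    """
    updates = [(local_update(global_weight, d), len(d)) for d in devices]
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


if __name__ == "__main__":
    # Three hypothetical devices, each holding private readings.
    devices = [[1.0, 2.0, 3.0], [10.0], [4.0, 6.0]]
    weight = 0.0
    for _ in range(100):
        weight = federated_round(weight, devices)
    print(round(weight, 3))  # approaches the mean of all readings
```

In this toy setup the shared model converges to the average of all readings even though no device ever reveals its individual values to the server.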

Developing UX, communication, and adoption strategies around these new infrastructures is challenging because they often defy long-held mental models of how modern software works.

A mental what?

A mental model is an abstraction of how something works. Psychologist Kenneth Craik (1943, The Nature of Explanation, Cambridge University Press) described how perception constructs “small-scale models of reality” that are used to anticipate events and to reason.

Mental models allow us to compress large amounts of data and experiences into smaller, memorable, and reusable models that we can rapidly apply to new situations. As I write this it occurs to me that this is not dissimilar from how machine learning works: sample lots of data, label it, and learn an abstraction that can predict an outcome when a new, different piece of data is presented. A meta note: you have just witnessed one of my mental models in action.

Mental models are personal, but their evolution is a collective act of continuous negotiation.

You might not be a mechanic, but you probably have a general sense of how combustion engines work. They have been around long enough for the majority of us to have experienced them in action, often daily. We have textbooks with schematics and movies with tropes around their failings (and quick magical fixes), and we need to answer basic questions about them correctly in order to get a driving license. So my mental model of a combustion engine is a personal aggregate of all of this, and it keeps evolving. When I was a child my model didn’t include considerations of the environmental consequences of combustion, and when I was a teen my model didn’t yet include the possibility of alternative power sources.

Philip N. Johnson-Laird (2001, Mental models and deduction) defined 3 principles of mental models:

Each mental model represents a possibility

The principle of truth: mental models represent what is true according to the premises, but by default not what is false

Deductive reasoning depends on mental models

The language is fascinating to me because it links mental models to the potential (or difficulty) of seeing things in different ways. If I have a solid mental model about something it will be difficult for me to see what is counter to that model, but if someone were to suggest the possibility of an alternative I might be able to evolve my model. So mental models are both cages and keys to unlock them.

When it comes to privacy there are fundamental mental models that predate current computing technologies and make evolving these infrastructures more challenging. Even before we had to deal with computers and digital services we all learned and applied a simple rule of thumb when dealing with other people: to get something useful from another person, we often need to share some personal information with them. It is difficult to have a mechanic fix our car without knowing its model and year and how much we drove it. It is difficult to get a loan without a banker knowing about our savings, job, and plans. It is difficult to get a doctor's opinion without sharing our symptoms or lab test results. And the list goes on, yet the pattern is the same. Personal information is a necessary aspect of most transactions; without it nothing moves. We generally accept this, unless we suspect or know that the other party will use this information in a malicious or simply asymmetrical way, gaining more from it than we do.

That is why we all learn techniques to vet other people’s intentions and develop a sort of spidey-sense for when we might be at risk of sharing too much. It is a matter of expectations and balance. We want information to be used as expected and we want to feel we are entering a balanced exchange where both parties get comparable benefits. Helen Nissenbaum brilliantly described this as Contextual Integrity.

Enter computers and digital services and the same applies: without revealing a bit of personal information it is difficult to get something useful in return. Except technology evolves faster than our mental models, and infrastructures like the Private Compute Core challenge this do ut des mental model: they posit that a mutually beneficial exchange can happen even when information is not shared between the two parties. The idea in itself is simple and appealing, but in practice it requires drastically changing how software is built and used today. Even better, it requires evolving how we think software should be built and used, since the assumptions we make when using a digital service are also likely the assumptions developers made when developing it: like the spice, data must flow.

Given the principles highlighted before, a mental model is not something you can simply erase or update. To evolve a mental model is to show the possibility of an alternative by providing concrete proof of it, and to let our brains draw their own deductions. I think we should do this by making systems such as the one I worked on more transparent, inspectable, and eventually verifiable or, in a single word, more legible.

Legibility

First published in 1998, the book Seeing Like a State: How Certain Schemes to Improve the Human Condition Have Failed by James C. Scott introduced the term ‘legibility’ as one of the key mechanisms the modern state uses to homogenize and operate society.

To make something legible means to make it understandable and quantifiable, thus manageable at scale. The introduction of surnames, standard units of measurement, censuses, and official languages are ways to make the complex realities of large territories and swaths of population legible, albeit at the cost of homogenization and loss of critical details.

The author does a great job of detailing the tension between the large-scale improvements and the failures caused by the centralized, top-down approach of high-modernist ideologies. It seems we could learn to do better the next time around; in this particular case, the notion of legibility is associated with the erasure of local diversity, cultures, and expertise.

As a designer the term ‘legibility’ caught my eye because to many of us it means something else. In typography, legibility represents the level of ease with which characters can be perceived by readers. Many factors determine it: shape, stroke contrast, angular size, tracking, kerning, etc. As Ilene Strizver says, “The legibility of a typeface is related to the characteristics inherent in its design” (Type Rules: The Designer’s Guide to Professional Typography, 2010).

This inspired me to further consider the notion of legibility when applied to systemic design, that is, to build legibility into the systems we design as a way to make them more transparent and comprehensible. This is less about flattening complexity into a single schema and more about opening a system up to inspection, critique, maintenance, and improvement. I have been deliberately using the term legibility instead of the ubiquitous ‘transparency’ because I think it adds a specific requirement of comprehension not necessarily included in our current notion of transparency. I can be transparent about something, but I might not make any particular effort to make it comprehensible.

We could try an adapted definition of legibility for our systemic design purposes:

In human-made systems, legibility represents the level of ease with which a system and its components can be understood.

There are two interesting aspects to a legible system:

Legibility is always directed, as it doesn’t exist without a specific subject. To whom is something legible?

Legibility is a proxy for power and agency, as being able to ‘read’ a system amounts to being able to critique, improve, repair, and maintain it.

As such, legibility is an intrinsic property of any human-made system, since each system is at least legible to its creator/s. But we know this is not enough. Think about a legalese-dense terms of service statement, or poorly annotated code, or a messy, unlabeled electrical panel; these are all perfectly legible to the experts who created them but potentially opaque, frustrating, and even dangerous for many others.

The role of design in system legibility

As designers we need to increase the legibility of systems and infrastructures and in some cases this requires going against another mental model I suspect many of us have internalized for a long time: extreme simplification as a hallmark of good design.

I am not arguing simplicity is always bad and we should instead resort to a maximalist approach. I am arguing we often highlight design’s ability to remove complexity but sometimes forget a critical nuance in how we go about it.

Designers can aim at hiding the complexity by sweeping it under the carpet of a simple UI that might make something feel like magic (I guess you can imagine how I feel about this type of design). Or design can aim to simplify complexity into something that can be intuitively understood and inform a better mental model about the underlying system.

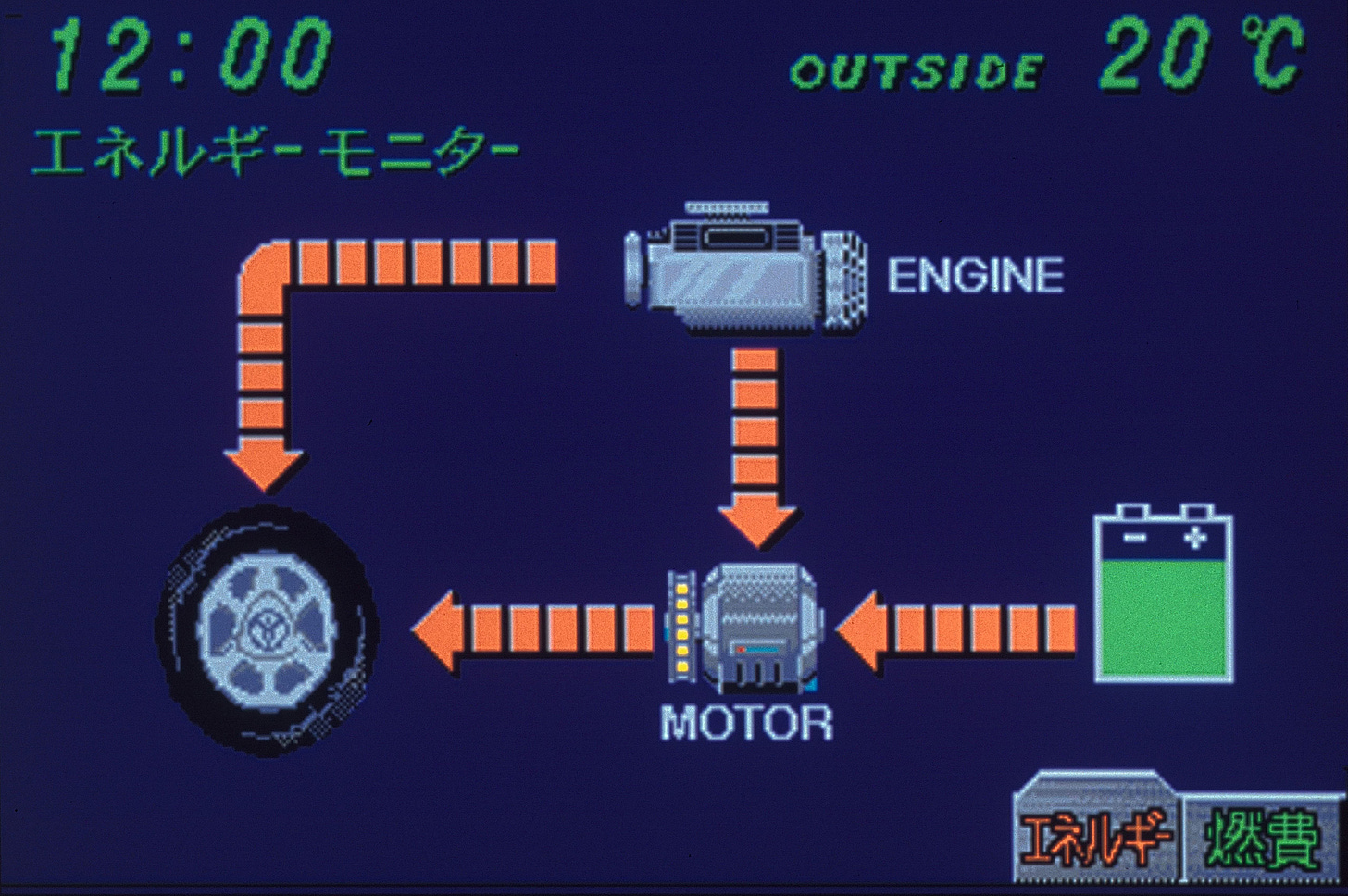

Reconnecting with the combustion engine mental model from earlier in the article, Toyota has for many years provided a good example of this: its Energy Monitor UI has evolved to become one of the identifying features of its hybrid cars and I suspect it has played a crucial role in setting a simple, yet accurate mental model of how regenerative braking works for many.

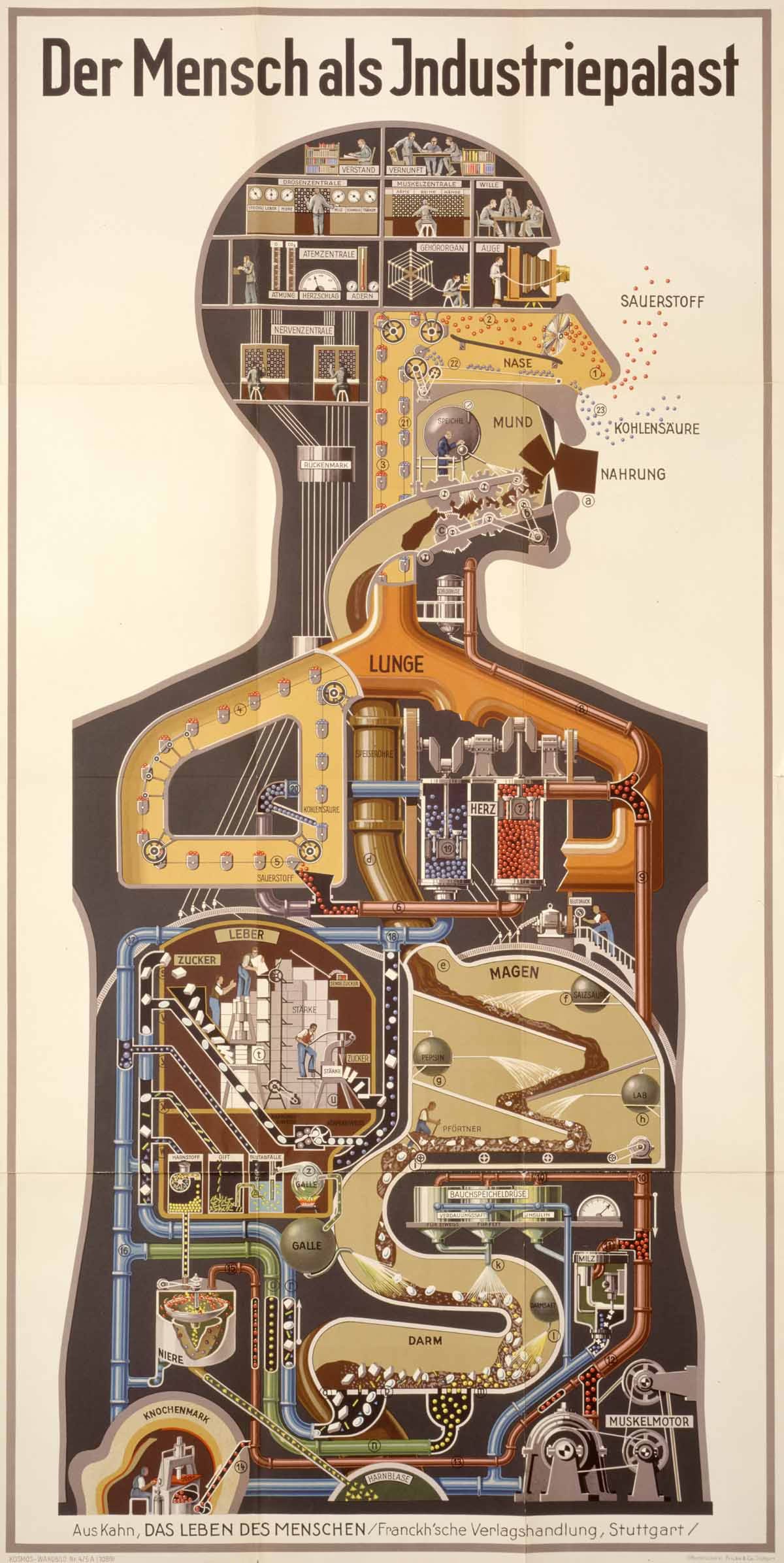

Design has a long history of infographics and data visualization, and a classical reference for when the data to be visualized is the behavior of the system itself is Fritz Kahn. His Factories of the Human Body tables have created a precedent for the use of analogy as a tool for applying known mental models (like film cameras or conveyor belts) to different, perhaps less legible, systems like the human body.

What would it mean to apply this approach to the design and development of digital systems and infrastructures?

Understand how the underlying system or technology works. An obvious step but there isn’t much to make legible if we cannot read it ourselves.

Make system legibility an explicit requirement of the design and consider it for all actors involved.

Apply infographics and data visualization techniques to translate complex and invisible systems into understandable ones.

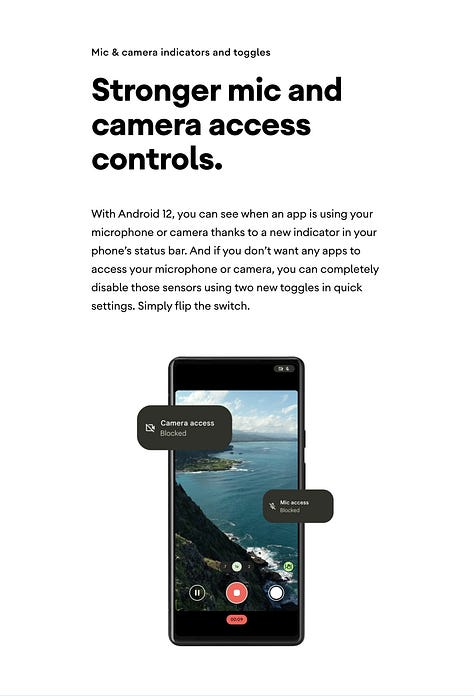

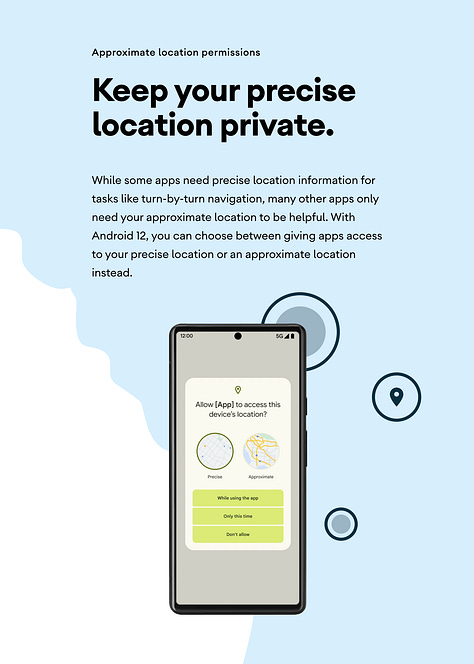

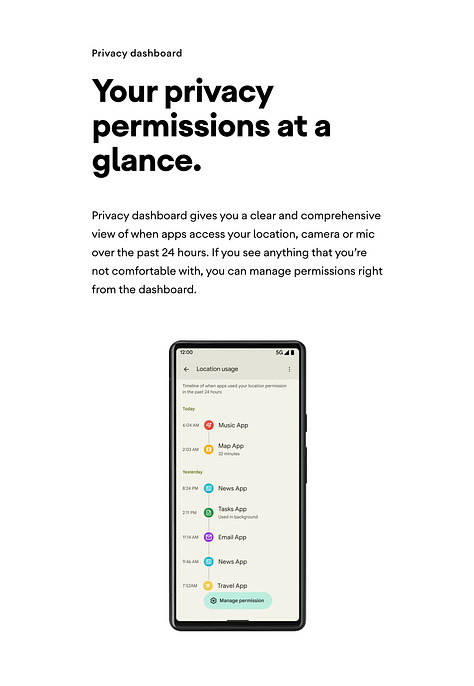

Design beyond the product surface: invest time in building live indicators, inspection dashboards, explainers and simulations to help all actors monitor and understand the system.

Expand UX research methodologies and metrics to measure the longitudinal impact of a design on comprehension: if we only optimize for immediate usability and delight, the magic button will regularly win, but that doesn’t mean we are helping people become more savvy.
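As one small illustration of designing beyond the product surface, the sketch below turns raw sensor access events into plain-language lines that an inspection dashboard could display. Everything in it is a hypothetical assumption made for illustration: the `AccessEvent` fields, the feature names, and the wording of the summaries are not taken from Android or any real API.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class AccessEvent:
    """A hypothetical record of one sensor access by a feature."""
    feature: str        # hypothetical feature name, e.g. "Captions"
    sensor: str         # e.g. "microphone"
    left_device: bool   # True if any of the data was sent off device


def summarize(events: List[AccessEvent]) -> List[str]:
    """Group identical events and describe each group in plain language."""
    counts = Counter((e.feature, e.sensor, e.left_device) for e in events)
    lines = []
    for (feature, sensor, left_device), n in sorted(counts.items()):
        where = "shared off device" if left_device else "processed on device only"
        times = "once" if n == 1 else f"{n} times"
        lines.append(f"{feature} used the {sensor} {times} ({where})")
    return lines


if __name__ == "__main__":
    log = [
        AccessEvent("Captions", "microphone", False),
        AccessEvent("Captions", "microphone", False),
        AccessEvent("Suggestions", "keyboard", False),
    ]
    for line in summarize(log):
        print(line)
```

The design choice worth noting is that the summary answers the questions a non-expert would ask (who, what, how often, and did it leave my device) rather than exposing the raw log format.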

Applying these principles to the design and implementation of the Android Private Compute Core has been a big part of my work in the last few years.

Through explainers, reference design implementations, open sourcing, and more legible UX for end users and developers, we managed to improve the Android privacy infrastructure, and in recent years OSes have made good progress with live privacy indicators, dashboards, and fine-grained privacy controls. You can read the whole Android Private Compute Core story here or check the white paper and the source code. But why stop at privacy? I hope these considerations on mental models, their evolution, and the importance of system legibility can help improve other critical infrastructures.

References:

Helen Nissenbaum (2004). Privacy as Contextual Integrity, Washington Law Review 79, 119

Kenneth Craik (1943). The Nature of Explanation, Cambridge University Press

The cover photo is a detail of an Isamu Noguchi sculpture from his NYC museum.